Introduction

It is estimated that more than 90% of companies already use some form of cloud service, and the public cloud market is projected to reach $623.3 billion worldwide by 2023. These statistics highlight a consistent pattern of businesses migrating their infrastructure from on-premises to the cloud. Industry analysts also argue that the question for companies is no longer whether to move to the cloud, but when.

There are several reasons for this. Adopting the cloud offers improved data access, scalability, and application security, along with enhanced operational efficiency. Oracle has also projected that companies can save up to 50% on infrastructure expenses by deploying workloads to a cloud platform.

However, transitioning to the cloud comes with its own set of challenges, and not every cloud migration project goes as smoothly as intended. While many factors can contribute to failure, a lack of planning and insight before the migration is one of the most prominent. Such a failure not only derails the organization’s long-term goal of improving operational efficiency but also results in wasted effort, time, and money.

This article addresses the most common challenges to expect when moving from an on-premises setup to a cloud platform, and how to overcome them.

Common Challenges of an On-Prem to Cloud Migration

As an essential best practice, organizations should diligently research and assess the most suitable processes and methodologies, and plan every step of the migration to ensure that the right decisions are made and costs are controlled. The following key considerations should be followed as a rule of thumb.

Choosing the Right Model and Service Provider

Choosing the right cloud model for a business and the right service provider can not only make or break the migration project, but also affect its future maintenance and sustainability.

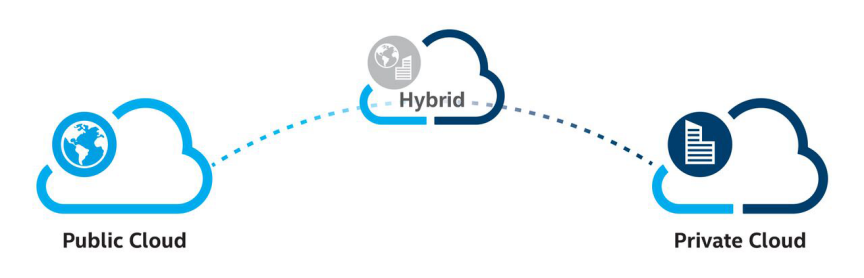

There are three cloud models to assess in order to determine the best fit for the company:

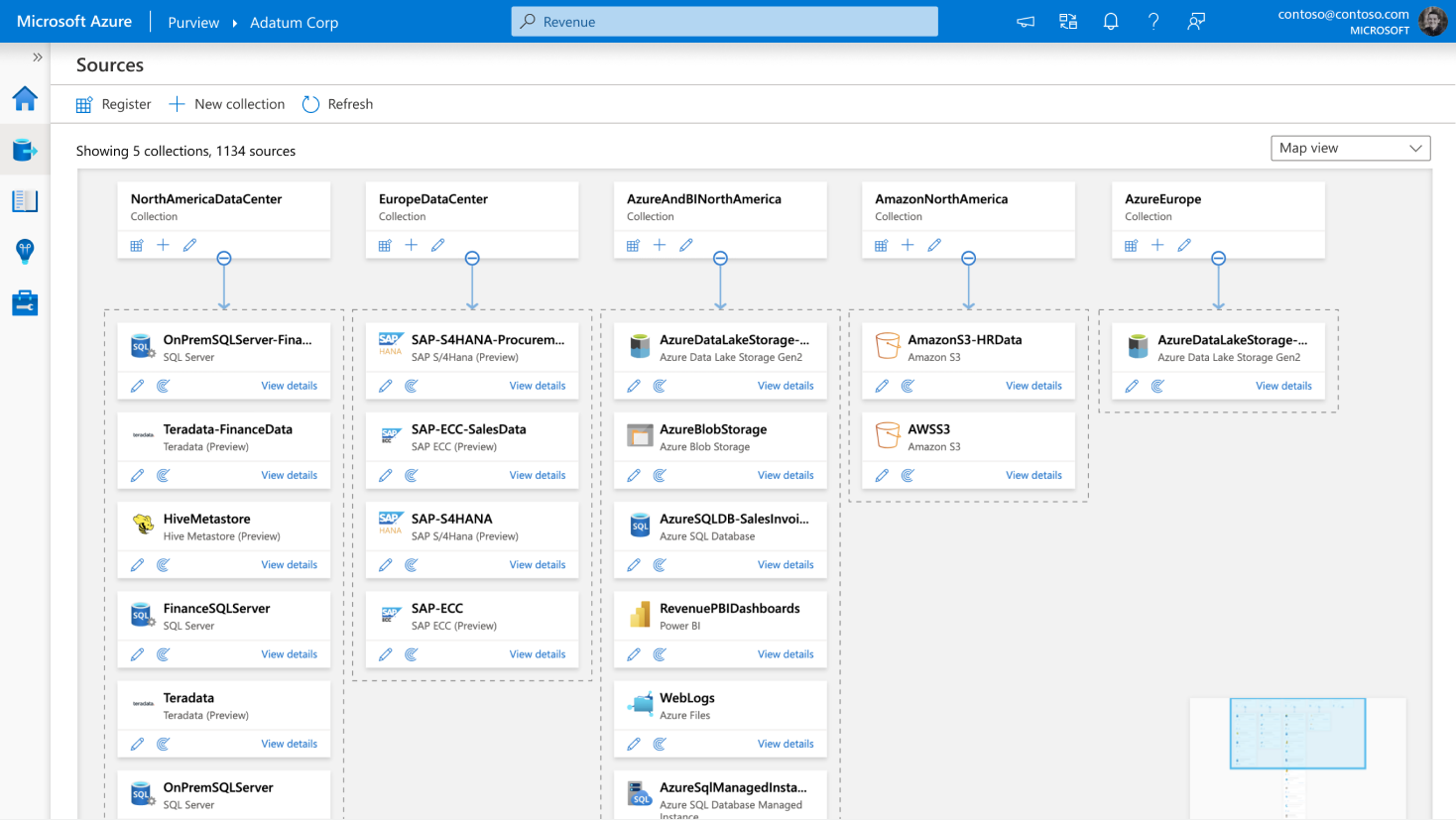

- Public Clouds are the most popular choice: a service provider owns and manages the entire platform stack of cloud resources, which are then shared to host a number of different clients. Common examples of public cloud providers are MS Azure, AWS, and Google Cloud.

- Private Clouds, by contrast, don’t share computing resources, as they are set up for the exclusive use of a single organization. Compared to public clouds, this model offers more control over customized needs and is generally used by organizations that have distinct requirements around security, platform flexibility, enhanced service levels, and so on.

- Hybrid Clouds combine a public or private cloud with on-premises infrastructure. This allows an organization to move data and applications between environments as its business processes or technical requirements dictate. For businesses that have already invested in on-site hardware, a hybrid cloud model can ease a gradual transition to the cloud over the long term. Additionally, for businesses that rely heavily on legacy applications, a hybrid cloud model is often seen as the one that provides the leeway to adopt new tools while continuing with traditional ones.

Apart from the cloud model, there are key factors to consider when selecting a service provider, such as:

- how the data is secured,

- agreed service levels and the provision to customize them,

- a guarantee of protection against network disruptions, and

- the costs involved.

It is important for an organization to be mindful of vendor lock-in terms, as once the transition starts with migrating data, it can be difficult and costly to switch providers.

What is recommended?

Plan thoroughly by analyzing the current and future architecture, security, and integration requirements. Be clear about the goals of migrating to the cloud and identify the vendors most likely to help achieve them. When choosing a service provider, a good starting point is to evaluate the proposed Service Level Agreement (SLA) for maintenance commitments, access to support, and exit clauses that offer flexibility.
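One practical way to compare SLA proposals is to convert each availability percentage into the downtime it actually permits. The Python sketch below does that arithmetic under a simplifying assumption: availability is measured over a single 30-day window with no exclusions, whereas real SLAs define their own measurement periods, exclusions, and service credits. The function name and the percentages in the usage block are illustrative, not taken from any provider’s agreement.

```python
from datetime import timedelta


def allowed_downtime(availability_pct: float,
                     period: timedelta = timedelta(days=30)) -> timedelta:
    """Maximum downtime permitted over `period` by an availability target.

    Simplification (assumption): availability is measured over the whole
    period, with no exclusions for scheduled maintenance.
    """
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    # timedelta * float is supported and rounds to the nearest microsecond.
    return period * (1 - availability_pct / 100)


if __name__ == "__main__":
    # Hypothetical targets from competing proposals.
    for pct in (99.0, 99.9, 99.95, 99.99):
        print(f"{pct}% availability -> up to {allowed_downtime(pct)} downtime per 30 days")
```

For example, a 99.9% target over 30 days allows roughly 43 minutes of downtime, while 99.99% allows about 4 minutes, a difference worth weighing against the price of each service tier.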

Engagement and Adoption from Stakeholders

Change within an organization is often met with resistance from multiple stakeholders, which can thwart efforts toward a smooth switch. For example, the finance department may oppose the transition because of cost, the IT team may feel their job security is threatened, or end users may not understand the reason for the change and fear that their services will be affected. Though such resistance is usually short-lived, it can compromise an organization’s immediate goals unless stakeholders are on board.

What is recommended?

Dealing with stakeholder resistance requires a holistic change in mindset across all levels of the organization. Hands-on training and guidance help users adopt cloud-based services, but addressing resistance preemptively gets the effort off on the right foot. For the various organizational units, it also helps to build a compelling business case that highlights the organization’s current challenges and explains clearly how migrating to the cloud will resolve them.

Security Compromise

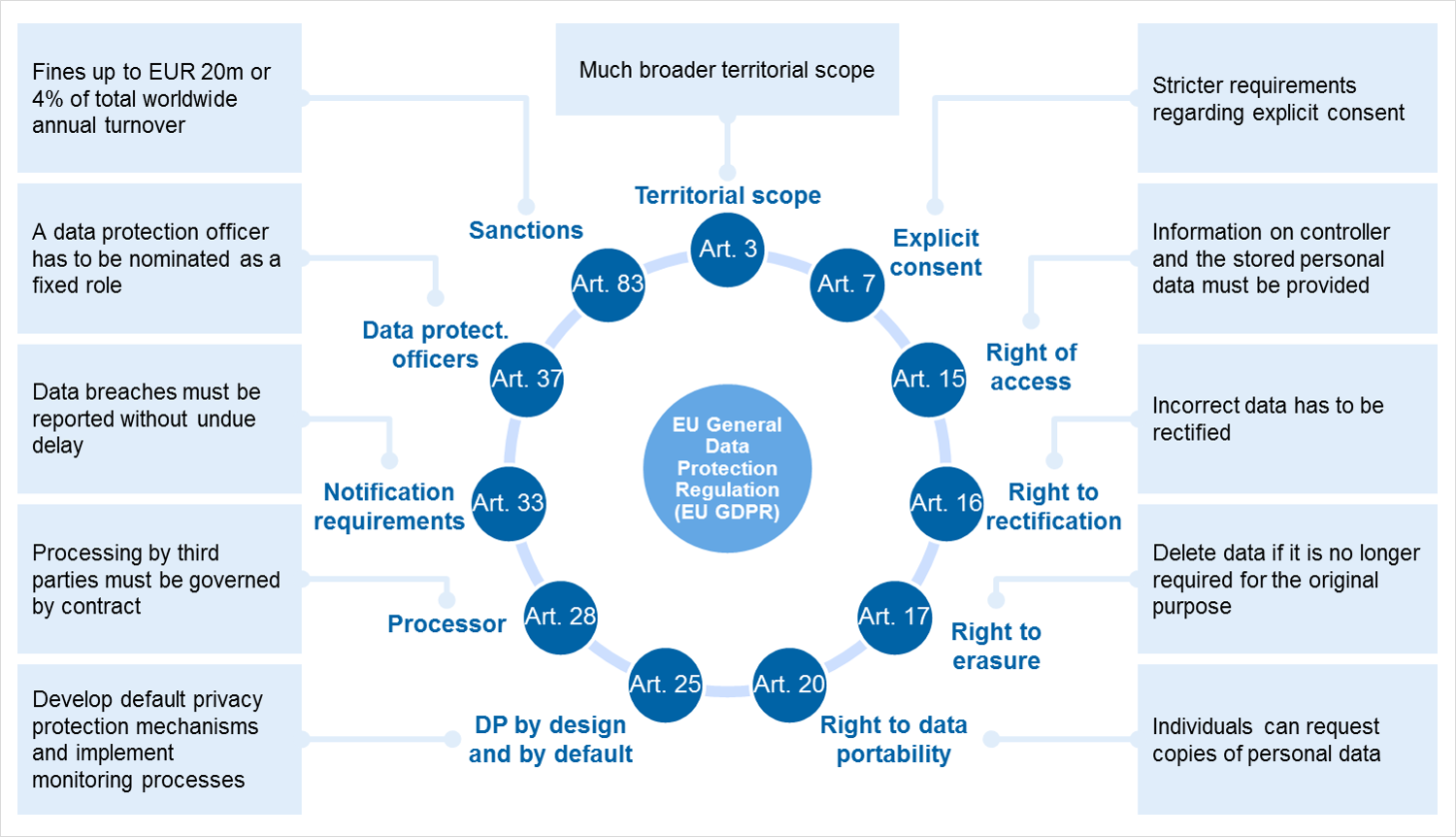

Whether the underlying architecture is on-premises or in the cloud, protecting a company’s data remains a top priority for any organization. When migrating to the cloud, a large part of the organization’s data security is managed by the cloud service provider. As a result, it is vital to thoroughly assess the vendor’s security protocols and practices.

Organizations should nonetheless stay in control of where their data is stored, how incoming and outgoing data is encrypted, what measures ensure that software is updated with the latest fixes, and what the provider’s regulatory compliance status is. Certain enterprise cloud providers, such as MS Azure, take a holistic approach to security and maintain compliance programs aligned with standards and regulations such as PCI DSS and HIPAA.

What is recommended?

Define in-house security policies and explore the security tools the cloud platform provides. In particular, proactively consider:

- authorization & authentication,

- audit lifecycle,

- application and network firewalls,

- protection against DDoS attacks and other malicious cyberattacks.

In addition, a secure cloud migration strategy should define how security is applied to data in transit and at rest, how user identities are protected, and how policies are enforced post-migration across multiple environments.

It is important to note that enforcing security across all layers and phases of implementation requires much more than tools. This usually begins with:

- fostering a security mindset across the organization,

- adopting security as part of the workflow by embracing a DevSecOps model, and

- incorporating robust policy and audit governance through Security-as-Code or Policy-as-Code methodologies.
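To make the Policy-as-Code idea concrete, here is a minimal Python sketch in which each policy is a named predicate evaluated against an inventory of resources. The `Resource` fields, the policy names, and the rules themselves (encryption at rest for storage, no public SSH or RDP ports, a mandatory `owner` tag) are hypothetical examples. Dedicated engines such as Open Policy Agent or Azure Policy express rules in their own languages and formats; this sketch does not mimic their APIs.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Resource:
    """Hypothetical, simplified description of a cloud resource."""
    name: str
    kind: str                                   # e.g. "storage" or "vm"
    encrypted_at_rest: bool = False
    public_ports: list[int] = field(default_factory=list)
    tags: dict[str, str] = field(default_factory=dict)


@dataclass
class Policy:
    """A named rule; `check` returns True when the resource complies."""
    name: str
    check: Callable[[Resource], bool]


# Illustrative policies only; an organization would derive its own from its standards.
POLICIES = [
    Policy("storage-encrypted-at-rest",
           lambda r: r.kind != "storage" or r.encrypted_at_rest),
    Policy("no-public-ssh-or-rdp",
           lambda r: not ({22, 3389} & set(r.public_ports))),
    Policy("owner-tag-present",
           lambda r: "owner" in r.tags),
]


def evaluate(resources: list[Resource], policies: list[Policy]) -> list[tuple[str, str]]:
    """Return a (resource name, policy name) pair for every violation found."""
    return [(r.name, p.name) for r in resources for p in policies if not p.check(r)]


if __name__ == "__main__":
    inventory = [
        Resource("billing-backups", "storage", encrypted_at_rest=True, tags={"owner": "finance"}),
        Resource("legacy-app-vm", "vm", public_ports=[22, 443]),
    ]
    for resource, policy in evaluate(inventory, POLICIES):
        print(f"VIOLATION: {resource} fails {policy}")
```

Because the policies live in code, they can be version-controlled, reviewed like any other change, and run in a CI pipeline so that violations block a deployment before it reaches the cloud environment.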

Avoid Service Disruptions

Legacy setups that rely extensively on third-party tools approaching their sunset (end-of-support) dates, or on in-house developed applications, require special provisions for a smooth transition. Setups built on virtual machines with hardware-level abstraction are more complex still, since their abstraction layers must be kept in sync and maintained through the pre- and post-transition phases. Unplanned migrations of such setups often lead to performance issues such as increased latency, interoperability problems, unplanned outages, and intermittent service disruptions.

What is recommended?

Replicating virtual machines to the cloud should be planned around the organization’s workload tolerance as well as its on-premises networking setup. It is advisable to use the agent-based or agentless tools offered by service providers, such as Azure Migrate, which provides a dedicated platform for assessing and migrating workloads.
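As a rough illustration of planning around workload tolerance and network capacity, the Python sketch below groups VMs into migration waves by the downtime each can tolerate and estimates how long the initial replication would take over the available link. The wave thresholds (240 and 30 minutes), the 70% link-efficiency factor, and the VM names are assumptions chosen for the example; a real plan would take these figures from an assessment tool and from measured bandwidth.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VM:
    name: str
    disk_gb: float          # data to copy during initial replication
    max_downtime_min: int   # cutover downtime the workload can tolerate


def initial_sync_hours(disk_gb: float, bandwidth_mbps: float, efficiency: float = 0.7) -> float:
    """Estimate hours to copy `disk_gb` over a link of `bandwidth_mbps`.

    `efficiency` is an assumed fraction of nominal bandwidth actually usable
    for replication (protocol overhead, competing traffic).
    """
    megabits = disk_gb * 8 * 1000  # decimal units: 1 GB = 8,000 megabits
    return megabits / (bandwidth_mbps * efficiency) / 3600


def plan_waves(vms: list[VM]) -> dict[str, list[str]]:
    """Group VMs into waves, most downtime-tolerant first (thresholds are assumptions)."""
    waves: dict[str, list[str]] = {"wave-1 (pilot)": [], "wave-2": [], "wave-3 (critical)": []}
    for vm in sorted(vms, key=lambda v: v.max_downtime_min, reverse=True):
        if vm.max_downtime_min >= 240:
            waves["wave-1 (pilot)"].append(vm.name)
        elif vm.max_downtime_min >= 30:
            waves["wave-2"].append(vm.name)
        else:
            waves["wave-3 (critical)"].append(vm.name)
    return waves


if __name__ == "__main__":
    inventory = [
        VM("intranet-wiki", disk_gb=120, max_downtime_min=480),
        VM("erp-app", disk_gb=300, max_downtime_min=60),
        VM("payments-db", disk_gb=900, max_downtime_min=5),
    ]
    for wave, names in plan_waves(inventory).items():
        print(wave, names)
    for vm in inventory:
        hours = initial_sync_hours(vm.disk_gb, bandwidth_mbps=200)
        print(f"{vm.name}: about {hours:.1f} h initial sync at 200 Mbps")
```

Starting with the most tolerant workloads lets a team validate its replication and cutover process on low-risk systems before touching critical ones, and the sync estimate flags early whether a large disk needs more bandwidth or an offline transfer option.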

As for legacy or sunset apps, organizations are advised to plan for Continuous Modernization, which provides for regular auditing of such apps while planning for their phased retirement in the longer term. For setups where an immediate Lift and Shift isn’t an option, the organization should recalibrate its migration strategy by considering Refactoring or Rearchitecting strategies, which rework the application’s code or architecture to suit the cloud platform.

Cost Implications

Accounting for near- and long-term costs during cloud migration is often overlooked, and several factors require consideration to avoid expensive and disruptive surprises. Because migration from a legacy setup to the cloud is gradual, organizational units often need to keep using both the on-premises and the cloud infrastructure in the near term. This implies additional costs from duplicated resource consumption, such as data sync and integrity, high availability, backup and recovery, and maintenance of current systems.

What is recommended?

Over the longer term, a cloud platform is typically more cost-effective. Although an organization can do little to avoid most of these expenses during migration, it should include them in its financial projections. When doing so, expect upfront costs related to the amount of data being transferred and the services being used, as well as added expenses from refactoring to ensure compatibility between existing solutions and the cloud architecture.
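The effect of running both environments during the transition can be checked with simple arithmetic. The Python sketch below compares cumulative spend for staying on-premises against migrating, under a deliberately simple, hypothetical model: a one-off migration cost paid up front, full on-premises costs continuing through an overlap period, and cloud-only costs afterwards. All figures in the usage block are made up for illustration.

```python
from __future__ import annotations


def cumulative_costs(months: int, onprem_monthly: float, cloud_monthly: float,
                     overlap_months: int, one_off: float) -> tuple[list[float], list[float]]:
    """Month-by-month cumulative spend for (staying on-premises, migrating).

    Hypothetical model: `one_off` (data transfer, refactoring) is paid up front,
    on-premises costs continue in full for `overlap_months`, then only cloud costs remain.
    """
    stay_total = 0.0
    migrate_total = float(one_off)
    stay: list[float] = []
    migrate: list[float] = []
    for month in range(1, months + 1):
        stay_total += onprem_monthly
        migrate_total += cloud_monthly
        if month <= overlap_months:      # both environments are paid for during the overlap
            migrate_total += onprem_monthly
        stay.append(stay_total)
        migrate.append(migrate_total)
    return stay, migrate


def break_even_month(stay: list[float], migrate: list[float]) -> int | None:
    """First month in which cumulative migration spend is no higher than staying put."""
    for month, (s, m) in enumerate(zip(stay, migrate), start=1):
        if m <= s:
            return month
    return None  # no break-even within the projection horizon


if __name__ == "__main__":
    stay, migrate = cumulative_costs(months=36, onprem_monthly=10_000, cloud_monthly=7_000,
                                     overlap_months=6, one_off=30_000)
    print("Break-even month:", break_even_month(stay, migrate))
```

With these illustrative figures, cumulative spend breaks even in month 30; shortening the overlap period or reducing the one-off costs moves that point earlier, which is why both belong in the financial projection.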

Benchmarking Workforce Skills

A migration plan that doesn’t benchmark workforce skills is often considered flawed. Cloud migrations can get complicated by customized requirements, new technologies, and the need to assess which systems and data will be moved. Much of the effort goes toward analyzing the existing infrastructure to establish what will work in the cloud and to identify future gaps in in-house workforce skills.

What is recommended?

Migrating to the cloud is a complex process that requires a unique set of soft and hard skills. Before transitioning, it is essential to understand what practical experience the team has with cloud platforms, and then take the necessary steps to upskill in relevant cloud technologies and security. Organizations should also set aside contingency funds for a consistent upskilling framework, so that teams can adopt emerging tools and practices smoothly.

Key Takeaways

Adopting a cloud framework today is more a necessity than a projected goal. When migrating to the cloud, it is equally important for an organization to develop a migration strategy that sets realistic expectations through thorough due diligence. Being aware of the challenges and how to address them not only minimizes immediate risks but also prevents the project from becoming a disaster in the long run.

Ultimately, the strategy determines how efficient the migration is, and a successful one delivers it without a noticeable impact on productivity or day-to-day operations.