Redefining The Future of QA: AI in Software Testing

Software quality is no longer just about finding bugs; it is about predicting defects, preventing them, and optimizing testing at scale. With the rise of Artificial Intelligence (AI) in Quality Assurance (QA), traditional testing methods are evolving into intelligent, self-improving systems that enhance efficiency and accuracy. As applications grow more complex and development cycles shrink, AI-driven solutions are transforming test case generation, defect prediction, and automation strategies, allowing QA teams to focus on higher-value tasks. From streamlining manual test case creation with AI-powered tools integrated into Jira and Azure DevOps to enhancing chatbot test automation with machine learning models and boosting test script development with GitHub Copilot, AI in software testing is redefining how teams ensure software quality. This blog explores how these innovations are revolutionizing QA, setting new industry benchmarks, and shaping the future of software testing.

Revolutionizing Manual Test Case Generation with AI

Manual test case creation has long been a tedious and error-prone process, often leading to inconsistencies and gaps in test coverage. AI-powered test case generation is transforming this traditional approach, enabling QA teams to automate test design, enhance accuracy, and improve overall efficiency.

One such tool making significant strides is AI Test Case Generator, which leverages AI to analyze user stories and automatically generate structured test cases. Each test case includes a unique ID, title, description, detailed steps, expected results, and priority level, ensuring comprehensive test coverage. Unlike manual methods, AI-driven generation minimizes human error and ensures consistency across test scenarios.
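To make the structure concrete, here is a minimal sketch of how a generated test case with those fields might be represented. The class name, field names, and the sample login case are assumptions for illustration; they are not the tool's actual schema.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    HIGH = "High"
    MEDIUM = "Medium"
    LOW = "Low"


@dataclass
class GeneratedTestCase:
    """One generated test case holding the fields listed above (hypothetical schema)."""
    case_id: str
    title: str
    description: str
    steps: list[str]
    expected_results: list[str]
    priority: Priority = Priority.MEDIUM

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the case is well formed."""
        problems = []
        if not self.case_id.strip():
            problems.append("missing ID")
        if not self.steps:
            problems.append("no steps")
        if not self.expected_results:
            problems.append("no expected results")
        return problems


# Hypothetical example derived from a "user can log in" story
login_case = GeneratedTestCase(
    case_id="TC-001",
    title="Login with valid credentials",
    description="A registered user can sign in from the login page.",
    steps=["Open the login page", "Enter valid credentials", "Click Sign in"],
    expected_results=["The dashboard is displayed"],
    priority=Priority.HIGH,
)
```

A simple `validate()` pass like this is one way to catch incomplete AI output before it reaches a test management tool.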

A key advantage of AI test case generation is its seamless integration with platforms like Atlassian Jira and Microsoft Azure DevOps. This integration allows QA teams to directly transform user stories into executable test cases, maintaining a clear traceability link between requirements and testing. Additionally, teams can organize AI-generated test cases under structured suites, such as regression testing, making it easier to track execution progress and maintain test artifacts over time.
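The traceability and suite grouping described here can be pictured with a small sketch. The story keys, suite names, and function names below are hypothetical, and the code works on plain tuples rather than calling the Jira or Azure DevOps APIs.

```python
from collections import defaultdict

# Hypothetical records: (test case ID, user story key, suite name)
generated_cases = [
    ("TC-001", "SHOP-101", "Regression"),
    ("TC-002", "SHOP-101", "Smoke"),
    ("TC-003", "SHOP-102", "Regression"),
]


def build_traceability(cases):
    """Map each user story key to the test case IDs that cover it."""
    by_story = defaultdict(list)
    for case_id, story_key, _suite in cases:
        by_story[story_key].append(case_id)
    return dict(by_story)


def group_by_suite(cases):
    """Map each suite name to the test case IDs filed under it."""
    by_suite = defaultdict(list)
    for case_id, _story_key, suite in cases:
        by_suite[suite].append(case_id)
    return dict(by_suite)


def uncovered_stories(story_keys, traceability):
    """Return the stories that have no linked test case yet."""
    return [key for key in story_keys if key not in traceability]


traceability = build_traceability(generated_cases)
suites = group_by_suite(generated_cases)
gaps = uncovered_stories(["SHOP-101", "SHOP-102", "SHOP-103"], traceability)
```

Here `gaps` lists the stories that still need test cases, which is the practical payoff of keeping the requirement-to-test link explicit.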

By automating the labor-intensive aspects of test case creation, QA teams can focus more on exploratory testing, refining complex scenarios, and improving test coverage. As organizations strive for faster release cycles and higher software quality, AI-powered test case generation is proving to be a game-changer, enabling faster, more accurate, and scalable QA processes.

Enhancing Chatbot Test Automation with Machine Learning

As chatbots become more integral to customer interactions, ensuring their accuracy and reliability is crucial. Traditional test automation methods struggle to validate chatbot responses due to the complexity of natural language processing. Machine learning-powered automation frameworks are revolutionizing chatbot testing by enabling intelligent, scalable, and repeatable validation of chatbot functionality.

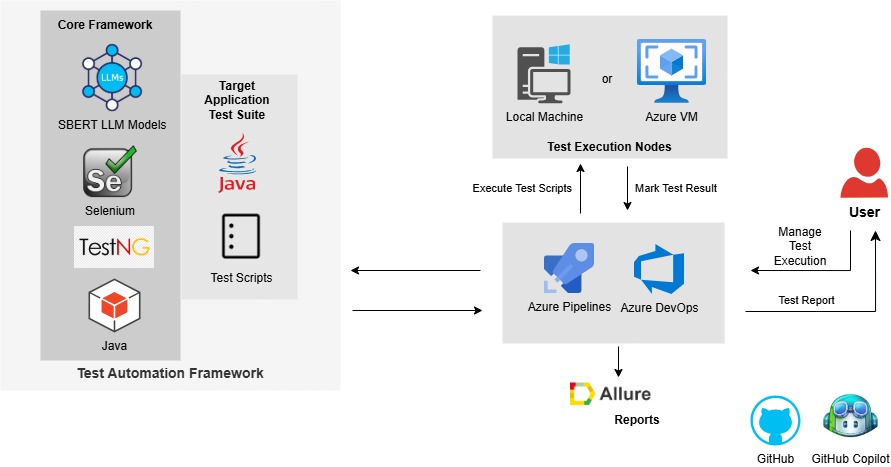

One of the key advancements in this space is the integration of SBERT (Sentence-BERT) within test automation frameworks. SBERT is a specialized machine learning model designed for natural language processing, which modifies the BERT (Bidirectional Encoder Representations from Transformers) architecture to generate semantically meaningful sentence embeddings using siamese and triplet network structures. This makes SBERT particularly effective for evaluating the semantic similarity between expected and actual chatbot responses. The framework encodes both responses as sentence embeddings and computes the cosine similarity between them; an assertion passes when the score meets a chosen threshold, so a correct answer phrased differently from the expected text can still pass. This AI-driven approach significantly reduces manual effort, improves response evaluation, and enhances overall test reliability.
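The cosine-similarity check can be sketched in a few lines. The toy three-dimensional vectors below stand in for real sentence embeddings, which in practice would come from an SBERT model (for example via the `encode` method of the sentence-transformers library). The function names and the 0.8 threshold are assumptions for illustration, not the framework's actual values.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def responses_match(expected_vec, actual_vec, threshold=0.8):
    """Assertion helper: pass when the embeddings are close enough (threshold is an assumption)."""
    return cosine_similarity(expected_vec, actual_vec) >= threshold


# Toy vectors standing in for real sentence embeddings
expected = [0.9, 0.1, 0.3]
paraphrase = [0.8, 0.2, 0.35]
off_topic = [0.1, 0.9, 0.0]

paraphrase_ok = responses_match(expected, paraphrase)
off_topic_ok = responses_match(expected, off_topic)
```

The threshold is the main tuning knob: set it too low and wrong answers pass, too high and valid paraphrases fail.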

The framework is designed specifically for chatbot testing, enabling seamless validation of the chatbot’s response accuracy against predefined regression scenarios and ensuring consistency with expected responses while excluding conversational context. Additionally, the use of GitHub Copilot for intelligent code suggestions ensures high-quality test script development. By automating the functional regression testing of chatbots, QA teams can shift their focus toward exploratory testing and refining complex chatbot behaviours. This machine learning-powered test automation framework not only improves chatbot accuracy but also ensures a more efficient and scalable QA process for AI-driven applications.
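A regression run of this kind might look like the sketch below, where each prompt is sent on its own, without conversation history. Everything here is illustrative: the scenarios, the stub bot, and the threshold are hypothetical, and the word-overlap score is a deliberately simple stand-in for the SBERT similarity described above so that the example runs without a model.

```python
def token_overlap(a, b):
    """Stand-in similarity: Jaccard overlap of lowercase word sets (not SBERT)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def run_regression(scenarios, get_reply, similarity=token_overlap, threshold=0.5):
    """Send each prompt independently and collect the scenarios that fall below the threshold."""
    failures = []
    for prompt, expected in scenarios:
        actual = get_reply(prompt)
        score = similarity(expected, actual)
        if score < threshold:
            failures.append((prompt, expected, actual, round(score, 2)))
    return failures


# Hypothetical regression scenarios: (prompt, expected response)
scenarios = [
    ("What are your opening hours?", "We are open from 9 am to 5 pm"),
    ("How do I reset my password?", "Use the reset link on the login page"),
]


def stub_bot(prompt):
    """Stub standing in for the chatbot under test."""
    canned = {
        "What are your opening hours?": "We are open from 9 am to 5 pm on weekdays",
        "How do I reset my password?": "Please contact the sales team",
    }
    return canned[prompt]


failures = run_regression(scenarios, stub_bot)
```

Passing the similarity function in as a parameter keeps the harness independent of the model, so an embedding-based scorer can be swapped in without touching the loop.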

Test Automation Architecture Diagram

Boosting Test Automation Efficiency with GitHub Copilot

AI-driven tools like GitHub Copilot are transforming how QA teams approach test automation, making script development faster and more efficient. Copilot provides context-aware code suggestions for writing and maintaining automated test scripts, reducing the manual effort required for repetitive coding tasks. By assisting in test scenario creation, test data generation, and assertions, Copilot helps QA engineers develop maintainable automation frameworks with less effort. Additionally, its ability to suggest more efficient approaches helps keep test scripts both accurate and performant, leading to higher-quality test automation solutions.

One of the most significant advantages of GitHub Copilot is its seamless integration with IDEs, allowing QA engineers to receive real-time code suggestions tailored to their test automation framework. The Copilot Chat IDE Plugin further enhances this capability by offering interactive AI-driven coding assistance, making debugging and refining test scripts more intuitive. However, while Copilot accelerates test script development, QA teams must validate AI-generated code to ensure accuracy and relevance, particularly in UI automation and unique test case generation, where AI suggestions may sometimes be generic. Despite these limitations, GitHub Copilot is revolutionizing QA automation, enabling teams to focus on high-value testing activities, improve efficiency, and enhance overall software quality in an increasingly AI-driven development landscape.

Conclusion

AI-driven advancements are reshaping the landscape of software testing, empowering QA teams to automate complex processes, improve efficiency, and enhance software quality. From AI-powered test case generation that ensures comprehensive coverage and traceability, to machine learning-driven chatbot automation that delivers precise validation of conversational AI, and GitHub Copilot-assisted test automation that streamlines script development—these innovations are revolutionizing traditional QA methodologies. By leveraging AI in test automation, organizations can reduce manual effort, accelerate testing cycles, and ensure more reliable software releases. As AI continues to evolve, its role in QA automation will only expand, making it essential for teams to embrace these intelligent solutions to stay ahead in an increasingly fast-paced development environment.

About the Author

Sarthak Seth is a results-driven Technical Lead in Software Testing with over 10 years of experience in designing and implementing QA strategies and solutions for diverse clients. With a strong foundation in test automation, quality engineering, and AI-driven testing, he excels at developing innovative processes that align with emerging trends and industry best practices.

Adept at leveraging AI and automation to enhance testing efficiency, he specializes in ensuring seamless quality assurance across modern cloud-based architectures. Passionate about innovation, he continues to redefine software quality engineering by integrating cutting-edge technologies into robust and scalable QA solutions.

Unlocking Developer Productivity with GitHub Copilot: The Future of AI-Assisted Coding

GitHub Copilot has become a game-changer for developers and organizations. Providing AI-driven code suggestions, explanations, and chat-based support, it empowers developers to work faster, smarter, and with greater accuracy.

What is GitHub Copilot?

GitHub Copilot is an AI-powered coding assistant developed by GitHub and OpenAI. Originally powered by OpenAI Codex, a descendant of GPT-3, it provides code suggestions in real time as developers type, helping them write code more efficiently.

It is like having a pair of virtual hands working alongside you to handle routine tasks, making it an invaluable tool for both experienced developers and beginners. It enhances the developer experience at every stage of the software development lifecycle. It integrates into IDEs, GitHub.com, and command-line interfaces to offer code completions, chat support, and context-aware recommendations.

GitHub Copilot has three primary versions:

How GitHub Copilot Transforms Development

1. Talent Retention and Job Satisfaction

Developers want modern tools that reduce mundane tasks. By automating repetitive coding and simplifying the onboarding process for new hires, Copilot makes developers’ lives easier. Happier developers tend to stay longer, reducing hiring costs.

2. Speed and Efficiency Boost

GitHub Copilot enhances efficiency in several ways:

These capabilities significantly reduce the time spent on “code toil,” so developers can focus on innovation and problem-solving.

3. Improving Code Quality and Security

GitHub Copilot prioritizes quality and security by:

How GitHub Copilot Works

Diagram showing how the code editor connects to a proxy which connects to the GitHub Copilot LLM. Image Source: GitHub

Data Pipeline

When a developer interacts with Copilot, it collects context (like open files and highlighted code) and builds a prompt. This prompt is sent to a secure Large Language Model (LLM) that processes it and returns suggested completions.

Safeguards and Filters

Before suggestions are presented, they undergo checks for quality, toxicity, and relevance. If any issues are found—like the presence of unique identifiers or security vulnerabilities—the suggestions are discarded.
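A post-processing filter of this kind could be sketched as follows. The two regular expressions are hypothetical and deliberately simple stand-ins for checks on personal identifiers and hard-coded secrets; the real service's checks are not public in this form and are certainly more thorough.

```python
import re

# Hypothetical patterns for illustration only
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
HARDCODED_SECRET = re.compile(
    r"(password|api_key|secret)\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE
)


def passes_filters(suggestion):
    """Return False for empty suggestions or ones containing flagged content."""
    if not suggestion.strip():
        return False
    if EMAIL.search(suggestion):
        return False
    if HARDCODED_SECRET.search(suggestion):
        return False
    return True


def filter_suggestions(suggestions):
    """Keep only the suggestions that pass every check; the rest are discarded."""
    return [s for s in suggestions if passes_filters(s)]


candidates = [
    "return a + b",
    "api_key = 'sk-123456'",
    "# contact jane.doe@example.com",
    "   ",
]
kept = filter_suggestions(candidates)
```

Discarding a flagged suggestion outright, rather than trying to repair it, keeps the filter simple and errs on the side of caution.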

User Control

Developers have control over which suggestions to accept, and they can enable filters to prevent Copilot from generating code that matches public repositories. This option strengthens code originality and reduces licensing risks.

Measuring Copilot’s Impact on Your Organization

GitHub suggests a four-stage process for evaluating Copilot’s return on investment (ROI):

Did you know? In a controlled study published by GitHub, developers using GitHub Copilot completed a coding task about 55% faster than those working without it!

Governance, Policy, and Compliance

Organizations should create an AI policy framework to ensure the proper use of Copilot. A good policy should address:

How to Roll Out GitHub Copilot

To maximize Copilot’s impact, GitHub recommends a six-step rollout strategy:

Is GitHub Copilot a Threat to Developers?

There has been some concern in the developer community that AI tools like GitHub Copilot will replace human developers. However, GitHub Copilot is intended to complement developers’ skills and workflows, not to replace them. It is truly a “copilot.”

It is a productivity tool that helps developers code faster, more efficiently, and more accurately. Copilot automates repetitive tasks but still relies on human developers for critical thinking, creativity, and problem-solving.

Conclusion

GitHub Copilot is a game-changing tool for developers. By automating mundane tasks and providing intelligent, AI-powered code suggestions, it boosts productivity and frees developers to focus on more complex aspects of their projects. Whether you are a seasoned pro or a beginner, GitHub Copilot can help you write better code faster.

As the tool continues to evolve, we can expect even greater enhancements in AI-assisted coding, making it an essential part of modern development workflows.

Embrace the future of coding with GitHub Copilot and experience a new level of productivity and creativity.

Start your GitHub Copilot journey today and see the transformation firsthand.

About the Author

Naveen Pratap Singh is a results-driven project/program management professional with over 10 years at Optimus Information. Known for transforming complex challenges into actionable solutions, he specializes in capacity planning, risk mitigation, and delivering high-impact software solutions across technologies like Azure, AWS, .NET, and DevOps.

From Development to Production: A CTO’s Guide to Building a Chatbot

Step 1: Identifying the Use Case

Building a chatbot begins with a well-defined use case that addresses a tangible business need. Key questions to consider:

Common use cases include:

Identifying a clear use case ensures resource alignment and sets the stage for measurable outcomes.

Step 2: Selecting the Right Technology Stack

Technology selection is critical to ensure the chatbot’s functionality, scalability, and long-term maintainability. Azure offers a robust suite of tools to streamline chatbot development:

As a CTO, ensure the chosen stack aligns with your organization’s existing ecosystem to avoid unnecessary complexity.

Schematic showing RAG using Azure AI Search
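The retrieval-augmented generation (RAG) flow in the schematic can be sketched in miniature: retrieve the most relevant passages, then ground the model's prompt in them. This is a toy under stated assumptions. The word-overlap scoring stands in for Azure AI Search, no model is called, and the knowledge base, function names, and prompt wording are hypothetical.

```python
def score(query, document):
    """Count how many distinct query words appear in the document (toy relevance)."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words)


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share at least one word with the query."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:top_k] if score(query, doc) > 0]


def build_grounded_prompt(query, passages):
    """Combine retrieved passages and the question into one prompt for the model."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base standing in for an indexed document store
knowledge_base = [
    "Refunds are processed within 5 business days",
    "Support is available on weekdays from 9 am to 5 pm",
    "Orders ship from our Vancouver warehouse",
]

question = "when are refunds processed"
passages = retrieve(question, knowledge_base)
prompt = build_grounded_prompt(question, passages)
```

In a production bot the `retrieve` step would query a search index and the prompt would be sent to a hosted model, but the shape of the flow stays the same.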

Frameworks for Building a Chatbot with OpenAI

Advanced AI models like OpenAI’s GPT have unlocked new possibilities for chatbot development. Several frameworks simplify their adoption within Azure’s ecosystem:

These frameworks, coupled with Azure’s scalability, make it easier to build intelligent, production-ready bots.

Step 3: Designing an Optimal Conversation Flow

User experience should drive the design process. Key focus areas include:

Azure Cognitive Services can help fine-tune conversation flows by enabling language understanding and sentiment analysis.

Step 4: Development and Testing

Development

Testing

As a CTO, prioritize iterative development with regular feedback loops to minimize risks.

Step 5: Productionizing the Chatbot

Transitioning to production involves ensuring the chatbot is enterprise-ready:

Performance Optimization

Monitoring and Analytics

Compliance and Security

Continuous Improvement

Step 6: Measuring Success

Define and track KPIs to assess the chatbot’s business impact:

Conclusion

From identifying the use case to deploying a production-grade chatbot, building a chatbot is a journey that is complex yet rewarding. Azure’s comprehensive toolset—including RAG, Cognitive Search, Blob Storage, Cosmos DB, and Function Calling—positions businesses to deliver robust, scalable, and intelligent chatbot solutions. As a CTO, your role is to align technical capabilities with strategic objectives, ensuring the chatbot delivers tangible value while being secure and adaptable. Remember, a successful chatbot evolves through continuous learning and adaptation—a reflection of your organization’s commitment to innovation.

About the Author

Khushbu Garg is an experienced Azure Solution Architect with over 13 years at Optimus Information. She has a proven track record in the information technology and services industry, specializing in Generative AI, OpenAI, and Azure cloud computing. With expertise in SQL and .NET development, Khushbu has successfully led complex projects leveraging cloud technologies. A Bachelor of Technology (BTech) graduate in Computer Science from Uttar Pradesh Technical University, she is passionate about delivering innovative solutions that drive business success.

The Business and Technical Advantages of Event-Driven Architecture

Event-driven architecture (EDA) is rapidly becoming a critical component for modern digital transformation initiatives. By focusing on the flow of events within an organization, EDA enables systems and teams to operate with greater efficiency, flexibility, and responsiveness. In this article, we explore what Event-Driven Architecture is, the main advantages of adopting it, and why it’s a key enabler for both technological and organizational transformation.

What is Event-Driven Architecture (EDA)?

Event-driven architecture (EDA) is a design paradigm that focuses on the production, detection, consumption, and reaction to events. In EDA, events are changes in state—such as a new customer sign-up, an order being placed, or an inventory update—that trigger reactions or actions within the system. Unlike traditional architectures, where systems might communicate in a tightly coupled, synchronous manner, EDA relies on loosely coupled components that communicate asynchronously.
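The produce, detect, and react cycle can be illustrated with a minimal in-process sketch. The `EventBus` class and the `order_placed` event below are assumptions for illustration; a production system would use a message broker or a managed eventing service rather than an in-memory queue.

```python
from collections import defaultdict, deque


class EventBus:
    """Minimal in-process publish/subscribe bus (a real system would use a broker)."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self._queue = deque()

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The producer only enqueues; it never calls consumers directly
        self._queue.append((event_type, payload))

    def dispatch(self):
        """Deliver queued events to every subscriber, in publication order."""
        while self._queue:
            event_type, payload = self._queue.popleft()
            for handler in self._handlers[event_type]:
                handler(payload)


log = []
bus = EventBus()
# Two independent consumers react to the same event
bus.subscribe("order_placed", lambda order: log.append(f"reserve stock for {order['id']}"))
bus.subscribe("order_placed", lambda order: log.append(f"email receipt for {order['id']}"))

bus.publish("order_placed", {"id": "A-100"})
pending = list(log)   # snapshot taken before dispatch: no consumer has run yet
bus.dispatch()
```

The producer knows nothing about inventory or email: adding a third consumer is one more `subscribe` call, with no change to the code that publishes the order.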

This approach allows systems to react to changes asynchronously, resulting in greater flexibility, scalability, and resilience. Other common architectural styles include:

Example of an e-commerce event-driven architecture.

Why Event-Driven Architecture?

Now that we understand what Event-Driven Architecture is, let’s explore why it is so beneficial for modern businesses and digital transformation.

EDA reduces the time required to release new features by decoupling services and allowing them to evolve independently. This reduces dependencies and enables quicker deployments.

By decoupling components, EDA ensures that services can scale independently, reducing the impact of changes or failures. Systems can grow organically, adding new services or removing them without affecting the entire application.

EDA’s decoupled nature also extends to organizational structures. Development squads can work on their areas of expertise without waiting on other teams, fostering greater efficiency, faster development cycles, and enhanced innovation.

EDA is a powerful enabler for digital transformation. Its loosely coupled nature makes it easier for even non-technical departments to integrate with the system, empowering business users to automate workflows and processes with minimal disruption to ongoing operations.

Replacing traditional architectures with event-driven models often results in significant cost savings. In one recent project, we replaced a legacy integration platform with Azure Integration Services, which reduced the client’s operational costs by 90%, while maintaining better performance and scalability.

Conclusion

Event-driven architecture is an optimal choice for businesses seeking agility, scalability, and efficiency in a fast-moving digital environment. It enables faster time-to-market for new solutions, simplifies system integration through decoupling, and supports the autonomy of development teams. Furthermore, it allows businesses to harness digital transformation opportunities by integrating various departments with minimal impact.

As seen in a recent project, this architecture can also dramatically reduce operational costs while maintaining high performance. As companies evolve and their technology needs grow, Event-Driven Architecture stands out as a resilient, cost-effective approach to modern system design, delivering measurable results and long-term value.

About the Author

Lucas Massena is an experienced Cloud Solutions Architect with over 20 years of expertise in .NET and event-driven architecture. Based in Brazil, Lucas currently leads cloud modernization and cost optimization projects for Optimus Information, a Canadian firm serving global clients.

With a unique blend of technical proficiency and strategic business insight, Lucas excels at translating complex business challenges into scalable, cost-effective technology solutions. A seasoned Microsoft speaker, he is passionate about digital transformation, cloud technologies, and creating measurable results that fuel business growth and success.

Unlocking the Power of Microsoft Fabric: A Unified Data Platform for the Future

In today’s fast-paced digital world, businesses need robust and integrated solutions to manage and analyze their data effectively. Enter Microsoft Fabric, a unified data platform that brings together various Microsoft tools and services to provide comprehensive solutions for data analysis, integration, and governance.

What is Microsoft Fabric?

Microsoft Fabric is designed to streamline data operations by combining real-time processing capabilities, storage, data integration, and business intelligence. This powerful platform facilitates the work of developers, data analysts, and IT managers, enabling them to consolidate their data operations within a highly scalable cloud environment.

Key Workloads for Microsoft Fabric

Microsoft Fabric offers a range of workloads tailored to meet diverse business needs:

These workloads make Microsoft Fabric a versatile platform for digital transformation and data analysis.

Why Choose Microsoft Fabric?

Organizations looking for a comprehensive and efficient platform to manage and analyze their data will find Microsoft Fabric to be a strategic choice. Here are some key reasons to choose Microsoft Fabric:

The Role of Copilot in Microsoft Fabric

Copilot in Microsoft Fabric is an innovative tool that uses artificial intelligence to assist users in their data-related activities. It provides contextual suggestions and task automation, optimizing workflow and increasing efficiency. Integrated into various features of Microsoft Fabric, such as Power BI and Synapse Data Science, Copilot allows users to interact through a chat interface, guiding their decisions and tasks.

Conclusion

Adopting Microsoft Fabric represents a significant transformation for organizations seeking to optimize their data operations and improve decision-making. With its unified platform, companies can achieve flexible scalability, reduce operational costs, and enjoy simplified maintenance.

Optimus Information is uniquely positioned to empower your organization to implement and utilize Microsoft Fabric efficiently. With our expertise in cloud solutions and data management, we provide tailored support throughout the entire deployment process, ensuring a seamless integration of Microsoft Fabric into your existing infrastructure.

By partnering with Optimus, you gain access to cutting-edge technology and best practices that drive innovation, foster collaboration, and ultimately lead to informed decision-making that propels your organization forward. Embrace the future of data management with Optimus and unlock the transformative benefits of Microsoft Fabric!

About the Author

Luis Rodrigues is a Solution Architect at Optimus Information specializing in crafting cloud architectures that align with clients’ business goals. His expertise lies in driving cloud adoption, from initial pilots and proofs of concept to resolving technical roadblocks. Luis is passionate about delivering customer-centric solutions, focusing on data, DevOps, AI, and low-code opportunities. With a focus on performance, security, scalability, and reliability, he ensures that cloud solutions meet the highest standards.

5 Signs It’s Time to Migrate Your Applications to the Cloud

The cloud has revolutionized how businesses operate. Cloud computing offers a robust, scalable, and cost-effective way to store and access data and applications. Gone are the days of bulky servers and software licenses; the cloud provides on-demand resources that adapt to your needs. But how do you know if it’s time for your business to make the leap and migrate your applications to the cloud? Here are 5 signs that point towards a cloud-based future:

1. Struggling with Scalability:

Imagine this: your business is booming, but your on-premise infrastructure can’t keep up. Scaling up with traditional methods involves buying more hardware and software, a costly and time-consuming process. Cloud computing offers a solution. With cloud-based applications, you can easily add or remove resources as needed. Need more processing power for a busy season? No problem! The cloud scales elastically, allowing you to adapt to changing demands without breaking the bank. For instance, a clothing retailer saw a surge in online orders during the holidays. By migrating their e-commerce platform to the cloud, they were able to seamlessly scale their infrastructure to handle the increased traffic, resulting in a significant boost in sales.

2. High Maintenance Costs and Outdated Infrastructure:

Maintaining legacy hardware and software can be a financial drain. Constant patching, updates, and hardware upgrades not only require money but also consume valuable IT resources. Cloud computing eliminates these burdens. You don’t need to invest in expensive hardware upfront; instead, you only pay for the resources you use. Cloud providers handle maintenance and updates, freeing up your IT team to focus on strategic initiatives. Imagine the cost savings and the increased productivity your IT team could achieve by migrating to the cloud!

Learn more in this blog: Legacy Systems: The Risks of Postponing Application Modernization

3. Security Concerns and Outdated Security Measures:

Cybersecurity threats are ever-evolving, and outdated security systems leave businesses vulnerable. Cloud providers invest heavily in advanced security measures, including firewalls, intrusion detection systems, and data encryption. Additionally, cloud platforms offer automatic updates and disaster recovery solutions, ensuring your data remains protected and accessible even in the event of a security breach.

4. Lack of Collaboration and Accessibility:

On-premise applications often limit collaboration – especially for geographically dispersed teams. Cloud-based applications, on the other hand, enable real-time collaboration and access from anywhere with an internet connection. This fosters improved communication and teamwork, potentially leading to increased employee productivity and better customer service.

5. Slow Innovation and Integration Challenges:

Legacy systems can be rigid and difficult to integrate with new technologies. Cloud platforms offer a wider range of tools and services that can be easily integrated with your existing applications. This opens doors for innovation and allows you to leverage cutting-edge technologies without the limitations of outdated systems.

Is it Time to Migrate?

If you’re experiencing any of these signs, consider whether it’s time to migrate your applications to the cloud. Evaluate your current infrastructure and application landscape to determine the best approach for your business. Numerous resources are available to help you navigate the cloud migration process. Consider contacting a cloud migration specialist like Optimus Information to discuss your specific needs and develop a tailored plan. By taking the leap to the cloud, you can unlock a world of benefits and empower your business for future success.

Debunking the Myths: What Cloud Migration Isn’t (But Should Be)

Cloud migration has become a hot topic for businesses of all sizes. The promise of scalability, agility, and cost savings is undeniable. However, many misconceptions and myths still surround this process. Let’s debunk these myths and shed light on what cloud migration truly is and what it should be.

Myth #1: Cloud Migration is a “Lift-and-Shift” Only Approach

Imagine simply picking up your entire server room and plopping it down in a virtual data center. That’s the idea behind a lift-and-shift approach to cloud migration. While it might seem efficient, it doesn’t leverage the full potential of the cloud. A true cloud migration should be an opportunity for optimization. By re-architecting applications to be cloud-native, you can take advantage of features like auto-scaling and serverless computing, leading to improved performance and cost savings.

Put another way, lift-and-shift is like packing up your entire house and dumping everything in your new apartment: furniture, clothes, even the kitchen sink. It seems like a quick fix, but nothing is improved by the move.

A successful cloud migration should be more like a well-planned move. You might decide to sell some furniture, donate old clothes, and invest in new appliances for your modern kitchen. Similarly, a cloud-native approach involves re-architecting your applications to take advantage of cloud functionalities like scalability and on-demand resources. This optimization can lead to significant performance improvements and cost savings.

Myth #2: Cloud Migration is Expensive and Complex

The fear of upfront costs and migration complexity is a common deterrent. However, the reality is that cloud migration can be cost-effective in the long run. On-premise infrastructure requires constant investment in hardware, software licenses, and maintenance. Cloud computing offers a pay-as-you-go model, eliminating upfront costs and allowing you to scale resources based on your needs. Additionally, numerous tools and services are available to streamline the migration process, minimizing downtime and disruption.
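The cost argument is easier to judge with numbers in front of you. The Python sketch below compares cumulative spend under the two models. Every figure in it is a made-up placeholder, and the function names are hypothetical; substitute your own hardware quotes, maintenance costs, and cloud estimates.

```python
from typing import Optional, Tuple


def cumulative_costs(
    months: int, upfront: int, on_prem_monthly: int, cloud_monthly: int
) -> Tuple[int, int]:
    """Return (on_prem_total, cloud_total) after the given number of months."""
    on_prem_total = upfront + on_prem_monthly * months
    cloud_total = cloud_monthly * months  # pay-as-you-go: no upfront purchase
    return on_prem_total, cloud_total


def break_even_month(
    upfront: int, on_prem_monthly: int, cloud_monthly: int
) -> Optional[int]:
    """First month in which cumulative cloud spend exceeds on-premise spend.

    Returns None when the cloud bill is never higher, i.e. when the monthly
    cloud cost is at or below the monthly on-premise running cost.
    """
    if cloud_monthly <= on_prem_monthly:
        return None
    # cloud_monthly * m > upfront + on_prem_monthly * m
    #   =>  m > upfront / (cloud_monthly - on_prem_monthly)
    return upfront // (cloud_monthly - on_prem_monthly) + 1


if __name__ == "__main__":
    # Placeholder figures: 120,000 of hardware up front, 2,000 per month to
    # run it, versus a 5,000 per month cloud bill.
    print(cumulative_costs(12, 120_000, 2_000, 5_000))
    print(break_even_month(120_000, 2_000, 5_000))
```

A comparison this simple ignores staff time, hardware refresh cycles, and the ability to scale cloud resources down, so treat it as a starting point for the conversation rather than a full total-cost-of-ownership model.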

Myth #3: Security is Less Robust in the Cloud

Many businesses cling to the belief that their data is safer locked away in their own data centers. However, cloud providers invest heavily in advanced security measures, including firewalls, intrusion detection systems, and data encryption. Furthermore, cloud platforms offer automatic updates and disaster recovery solutions, ensuring your data remains protected and accessible even in the event of a security breach. The key lies in choosing a reputable cloud provider with a proven track record of security.

Read more here: 5 Cloud Security Best Practices

Myth #4: Cloud Migration Leads to Data Loss or Vendor Lock-in

Your data remains yours in the cloud. Cloud providers offer robust data ownership and control mechanisms. Additionally, cloud migration tools can facilitate easy data transfer between providers, mitigating the risk of vendor lock-in. For businesses with specific data regulations, compliance considerations during cloud migration are crucial. However, most reputable cloud providers offer solutions and support to ensure adherence to data privacy regulations.

What Cloud Migration Should Be

Cloud migration should be more than just a technical exercise. It’s a strategic business move with the potential to transform your organization. Here’s how:

To learn more, check out our blog: Common Cloud Adoption Missteps during the Strategy & Planning Phase

Moving Forward

Cloud migration offers a wealth of benefits for businesses of all sizes. By debunking the myths and approaching migration with the right mindset and planning, you can leverage the cloud to achieve greater agility, scalability, and innovation.

Conclusion

Cloud migration is more than just a technical exercise; it’s a strategic move with the potential to transform your business. By debunking the myths and approaching migration with the right mindset and planning, you can unlock the true potential of the cloud and achieve significant benefits for your organization.

Ready to start your migration? Schedule a quick call to discuss your needs.

Optimus Information Renews Migrate Enterprise Apps to Microsoft Azure Specialization

VANCOUVER, BRITISH COLUMBIA, CANADA, June 10, 2023 /EINPresswire.com/ -- Optimus Information Inc. announced it has renewed its Migrate Enterprise Apps to Microsoft Azure Specialization, a validation of a solution partner’s deep knowledge, experience, and success in planning and migrating its customers’ applications to Azure. Microsoft partners with this specialization demonstrate expertise in migrating and deploying production web application workloads, applying DevOps, and managing app services in Azure.

This specialization distinguishes channel partners that have met stringent criteria around customer success and staff development and training, and that have passed a third-party audit of their migration practices. This achievement underscores Optimus Information’s commitment to delivering best-in-class cloud solutions that empower businesses to leverage the full potential of Azure.

Migrating enterprise applications to the cloud offers a wealth of benefits, but it can also be a complex undertaking. Organizations are increasingly looking to leverage the agility, scalability, and cost-efficiency of cloud platforms like Azure. However, top concerns include security, downtime during migration, and ensuring a smooth transition for users. Optimus Information, with its renewed Migrate Enterprise Apps to Microsoft Azure Specialization, helps organizations navigate these challenges and unlock the full potential of the cloud.

“Renewing our Azure Migrate Enterprise Apps to Azure Specialization signifies our ongoing dedication to staying at the forefront of cloud migration technologies,” said Pankaj Agarwal, Founder and Managing Partner at Optimus Information. “This recognition empowers us to provide our clients with unparalleled expertise in modernizing their applications and unlocking the agility, scalability, and cost-efficiency benefits of Azure.”

Beyond their renewed Azure application migration expertise, Optimus Information boasts a comprehensive suite of cloud capabilities. They hold an additional Advanced Specialization for Infra and Database Migration to Microsoft Azure. Furthermore, they are a designated Microsoft solution partner across key areas like Infrastructure, Digital and App Innovation, and Data & AI.

To learn more about Optimus cloud services and how to work together in transforming your business, please visit optimusinfo.com

About Optimus Information Inc.

Optimus Information is a premier Microsoft Cloud Partner offering a comprehensive suite of cloud solutions and services to businesses across Canada and the US. With a team of highly skilled professionals and a client-centric approach, Optimus delivers innovative and tailored cloud solutions that drive digital transformation, enhance productivity, and empower organizations to achieve their strategic goals.

Azure OpenAI: A Strategic Investment for Business Growth

In today’s rapidly evolving business landscape, innovation is no longer a luxury; it’s a necessity. Companies are constantly seeking ways to streamline operations, optimize decision-making, and gain a competitive edge. This is where Artificial Intelligence (AI) steps in, offering a transformative potential to unlock new possibilities across industries.

Microsoft Azure, a leading cloud computing platform, has taken a significant leap forward in the realm of AI by partnering with OpenAI, a research and development company focused on creating safe and beneficial artificial general intelligence (AGI). This collaboration has resulted in Azure OpenAI, a suite of powerful AI services readily available for businesses of all sizes.

This blog will delve into the strategic advantages of leveraging Azure OpenAI, exploring how it can fuel business growth and empower organizations to achieve their full potential.

Unveiling the Power of Azure OpenAI

Azure OpenAI provides businesses with access to cutting-edge AI models pre-trained on massive datasets. These models can be readily integrated into existing workflows or utilized to develop entirely new applications. Here’s a glimpse into some of the core capabilities offered by Azure OpenAI:

These are just a few examples, and the potential applications of Azure OpenAI are constantly expanding. The key lies in understanding how these capabilities can be tailored to address your specific business challenges and opportunities.

The Strategic Advantage of Azure OpenAI

Beyond the functionalities offered, Azure OpenAI provides businesses with strategic advantages over traditional AI development approaches:

Unlocking Business Growth with Azure OpenAI

By integrating Azure OpenAI into their operations, businesses can unlock a multitude of growth opportunities:

These benefits are not merely theoretical; companies across various industries are already reaping tangible rewards through Azure OpenAI.

Real-World Examples of Success:

These are just a few examples, and the possibilities are truly limitless. As AI technology continues to evolve, businesses that embrace Azure OpenAI will be well-positioned to unlock new levels of innovation and achieve sustainable growth.

Getting Started with Azure OpenAI

Microsoft is committed to making AI accessible for all businesses. Azure OpenAI offers a free trial, allowing you to experiment with different functionalities and discover its potential for your organization. Additionally, Microsoft provides a wealth of resources, including documentation, tutorials, and sample code, to help you get started on your AI journey.

Here are some key steps to consider when exploring Azure OpenAI:

By leveraging these resources and the expertise of Microsoft partners like Optimus Information, businesses can seamlessly integrate Azure OpenAI into their existing workflows and unlock the transformative power of AI.

Connect with us if you want to develop an Azure OpenAI POC.

The Power of Preparation: How Azure Migration Assessments Optimize Your Cloud Investment

In today’s digital landscape, cloud migration has become an essential step for businesses seeking scalability, agility, and cost-effectiveness. However, jumping headfirst into the cloud without proper planning can lead to unexpected challenges and missed opportunities. This is where Azure Migration Assessments emerge as a critical tool for optimizing your cloud investment.

Why Preparation Matters: Avoiding the Pitfalls of Unplanned Migration

Migrating to the cloud is a strategic move, not just a technological one. Without a clear understanding of your existing infrastructure, application dependencies, and security posture, the migration process can become a costly and time-consuming endeavor. Here’s how an unplanned migration can go wrong:

Azure Migration Assessment: Your Roadmap to a Smooth Cloud Journey

An Azure Migration Assessment acts as a comprehensive pre-flight check, providing valuable insights into your existing environment and paving the way for a smooth and successful migration to Azure.

Here’s what a typical Azure Migration Assessment entails:

Benefits of a Comprehensive Azure Migration Assessment

Investing in an Azure Migration Assessment before embarking on your cloud journey offers a multitude of benefits:

Beyond the Assessment: Ongoing Optimization in the Cloud

A successful cloud migration is not a one-time event. Continuous monitoring and optimization are crucial for maintaining peak performance and cost-effectiveness. Here’s how to leverage Azure tools for ongoing optimization:

Conclusion: Investing in Preparation Pays Off

By embracing Azure Migration Assessments as a critical first step, you gain the power of preparation for your cloud journey. This comprehensive evaluation helps you navigate the complexities of migration, optimize your cloud investment, and pave the way for a successful and sustainable cloud environment. Remember, the cloud is a powerful tool, and a well-planned migration unlocks its full potential to drive innovation, agility, and growth for your business.

Are you considering migrating to the Cloud but unsure where to start? Schedule a quick call to discuss your specific needs and see if you are eligible for a complimentary assessment.