Are you on the verge of starting your cloud migration journey? Here are a few tips to help guide you through the process.

1. Consider refactoring monolithic applications

Decomposing monolithic applications into services or microservices before moving to the cloud could bring a better return on investment than just using the cloud as another application co-location solution.

A monolithic application is a software system composed of a single, cohesive unit of code, normally self-contained and independent from other systems or applications. Moving this kind of application to the cloud is possible, but planning is critical because the migration is an involved process.

Evaluate the specific needs of the application and infrastructure before deciding to move to the cloud, and work with an experienced team of architects and developers who can help plan and execute the migration.

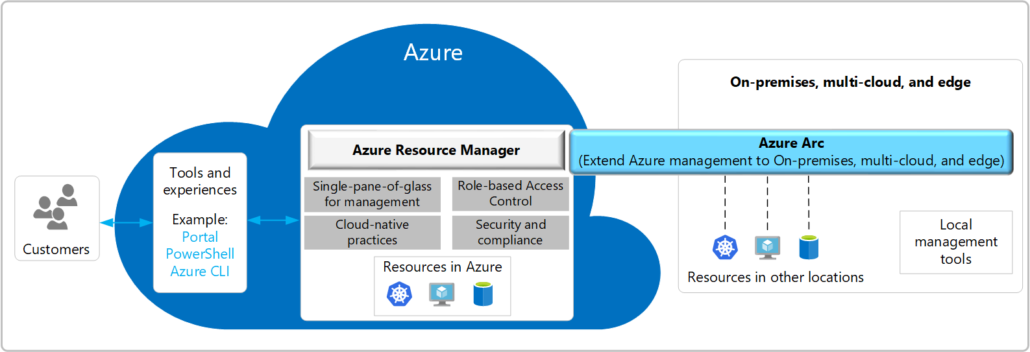

2. Consider a hybrid model

Hybrid models mix on-premises and cloud technologies and are often the best fit for legacy systems that are too complex to migrate to the cloud completely.

We believe that all larger organizations will at least move toward a hybrid model if they choose not to migrate completely after evaluation and that smaller organizations will follow suit.

Still, this migration can be complex given the relationships between systems. It is easier to move an on-premises system to the cloud completely than partially, because integration planning must account for how the on-premises and cloud components depend on each other in order to preserve the system’s security, integrity, and availability. Even so, hybrid models do work and will continue to work.

3. Evaluation and planning

Cloud migration requires planning and adequate resourcing. Every step of the process needs to be determined carefully. A well-mapped starting point will save headaches and smooth the entire process.

Not every company has all the ‘teams’ in place to move from a traditional model to a cloud one. The cloud brings many roles and disciplines to consider: architects, DevOps, security, networking, and finance. These may not be typical roles in traditional companies, especially at the start-up stage.

You can either develop the right people within the organization or work with a partner to obtain these skills. Cloud migration is difficult to accomplish alone, especially if the project is meant to follow the industry’s best practices and proven blueprints. As an organization, you can start alone and take your first steps in the cloud, but to ensure that your environment grows organically and stays secure and highly available, you will need external guidance.

You will also need to account for data protection and regulatory requirements, which are among the most common reasons companies hold back from moving to the cloud. Storing data in the cloud can be more secure than storing it in an on-premises system, but you need to learn how to secure your data in the cloud and how contracts with cloud providers work. For example, regulators may tell you that your company data can’t be stored outside the country.
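As a rough illustration of the data residency point, here is a minimal Python sketch that flags regulated datasets stored outside an allowed country. Everything in it is an assumption made for the example: the region names, the `REGION_COUNTRY` mapping, the `Dataset` fields, and the `residency_violations` helper are hypothetical and do not correspond to any particular cloud provider’s API.

```python
from dataclasses import dataclass

# Hypothetical mapping of cloud region names to ISO country codes.
# Real providers use their own region identifiers.
REGION_COUNTRY = {
    "eu-central": "DE",
    "eu-west": "IE",
    "us-east": "US",
}


@dataclass(frozen=True)
class Dataset:
    name: str        # illustrative dataset label
    region: str      # region where the data is stored
    regulated: bool  # True if residency rules apply to this data


def residency_violations(datasets, allowed_country):
    """Return names of regulated datasets stored outside allowed_country.

    A region missing from REGION_COUNTRY is treated as a violation,
    so unknown locations are flagged rather than silently accepted.
    """
    return [
        d.name
        for d in datasets
        if d.regulated and REGION_COUNTRY.get(d.region) != allowed_country
    ]


datasets = [
    Dataset("customer_records", "us-east", regulated=True),
    Dataset("marketing_assets", "us-east", regulated=False),
    Dataset("payroll", "eu-central", regulated=True),
]

violations = residency_violations(datasets, allowed_country="DE")
print(violations)
```

A check like this could be run during planning to see which workloads can move as they are and which need a region inside the country or have to stay on-premises.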

4. Utilize already-validated designs

Minimize your effort by making the most of the vast cloud migration and implementation resources that already exist.

Using models and best practices validated by the industry will speed up the cloud migration process. Don’t lose time creating what is already available and what’s been tried and tested in the cloud; there are many resources you can utilize. Large cloud providers like Microsoft and Amazon already have blueprints for most cloud workloads.

5. Doing it at the right time

The right time for cloud migration is the right time for your organization: when you have the time and the resources. This will depend on your individual business circumstances.

When considering cloud migration, organizations should not spend too long debating whether the cloud can help their business. The truth is that your competitors are planning to move to the cloud or have already done so.

Signs that it is the right time to move to the cloud:

- When building a new software product

- When you need to keep an application or applications up to date

- When making a large data center investment

- Before renewing a contract with a third-party data center service

Above all, scalability is the most valuable capability the cloud makes available. Cloud-enabled companies thrive on scalability: processing large quantities of data through machine learning and analytics can be highly challenging with on-premises resources. The cloud democratizes access to tools such as AI and machine learning that would otherwise be too complex to run, making it possible to analyze very large volumes of data.

Are you ready to start your cloud journey? For more information as you plan your migration to the cloud, please contact us.