Introduction

These days, more and more companies are opting for digital transformation. As a result, there is a tectonic shift toward frameworks and tools that create efficiency and improve the bottom line. Businesses increasingly recognize that the cloud enables economies of scale by removing redundant tasks and reducing operating costs.

However, this is easier said than done. In one of our earlier articles, we discussed how migrating from an on-prem environment to the cloud requires a systematic, well-thought-out plan. This article outlines six common cloud migration strategies and the key considerations that help in choosing the right one.

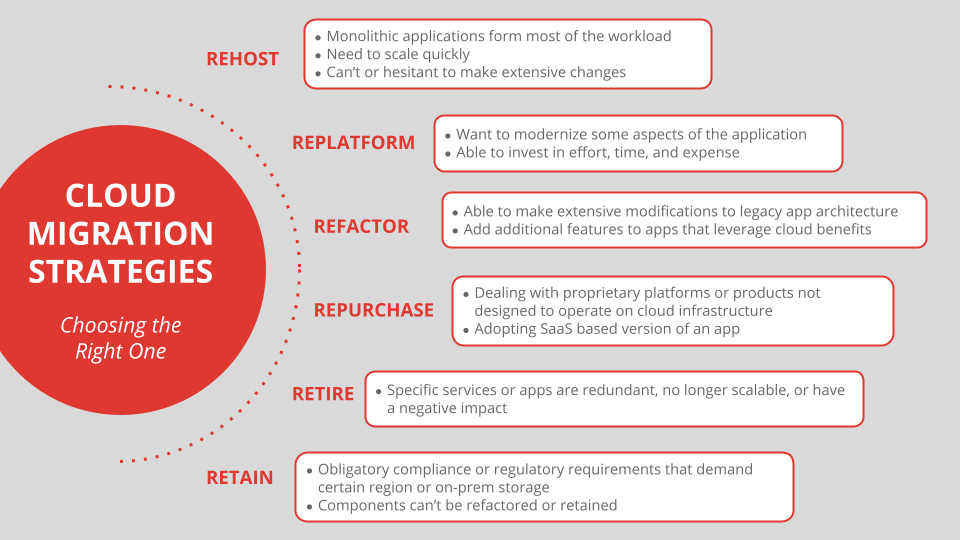

Common Cloud Migration Strategies

1. Rehosting

Typically referred to as the lift-and-shift technique, a Rehosting strategy involves migrating applications partly or fully from an on-premises setup to a cloud-based infrastructure without redesigning the application architecture.

Strategic Purpose

Rehosting helps companies get up and running quickly without the need to make extensive changes.

When to Choose this Strategy

Rehosting is often used where monolithic applications form a considerable part of the overall workload. It is also a good fit when the migration must be completed as quickly as possible with minimal business disruption. For organizations that are still experimenting with cloud capabilities and are hesitant to commit to long-term plans, Rehosting is the recommended approach. It is likewise a sensible choice for organizations that prefer a blended model of on-prem and cloud.

2. Replatforming

Often known as the lift-tinker-and-shift approach, a Replatforming migration strategy is a variation of the Rehosting approach that optimizes workloads and applications before moving them to the cloud.

Strategic Purpose

Replatforming enables companies to upversion applications while retaining the core application architecture. Here, upversioning means rewriting parts of the application code as needed to optimize the application for the cloud. A typical example is migrating database servers from on-prem to a cloud-based Database-as-a-Service (DBaaS) offering. To support the DBaaS model, some of the application code that works with the database has to be rewritten, but the underlying business logic and core architecture are retained.
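As a rough illustration of the DBaaS example, the Python sketch below isolates the one piece of code that changes (where the database lives) from business logic that stays the same. The environment variable name `DATABASE_URL`, the host names, and the `order_total` function are assumptions made for this example and do not come from any particular product.

```python
import os

# Hypothetical on-prem database address, used when no cloud endpoint is configured.
ON_PREM_URL = "postgresql://db.internal.example:5432/orders"


def database_url() -> str:
    """Return the connection URL, preferring a DBaaS endpoint if one is set."""
    # DATABASE_URL is an assumed variable name that would be set after the move to DBaaS.
    return os.environ.get("DATABASE_URL", ON_PREM_URL)


def order_total(prices: list[float], tax_rate: float) -> float:
    """Business logic that is untouched by the migration."""
    return round(sum(prices) * (1 + tax_rate), 2)


if __name__ == "__main__":
    print("Connecting to:", database_url())
    print("Order total:", order_total([10.0, 5.5], 0.1))
```

In this sketch, pointing the application at the managed database is a configuration change, while `order_total` runs unmodified before and after the move. Real Replatforming projects usually touch more code than this, for example drivers, connection pooling, or backup scripts.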

When to Choose this Strategy

This strategy is chosen by organizations that want to modernize some aspects of their applications to take advantage of cloud benefits such as scalability and elasticity. However, organizations must be mindful of the considerable effort, time, and money that the migration involves.

3. Refactoring

Refactoring involves making extensive modifications to the legacy application architecture and a large portion of its codebase so that the application fits the cloud environment as well as possible.

Strategic Purpose

Refactoring aims to improve the existing application and implement features that could be difficult to achieve with the current way the application is structured. Rewriting the existing application code also helps organizations enable better resource utilization for workloads that would otherwise be expensive to rehost in the cloud.

When to Choose this Strategy

Organizations that want to migrate legacy applications can take advantage of the greater cost benefits this migration approach provides. It is also suitable for businesses that want to add features that leverage niche cloud utilities to improve performance and meet business requirements. Adopting cloud services such as serverless computing or high-performance data lakes is a common reason for choosing this model.

4. Repurchasing

Known as the drop-and-shop approach, the Repurchasing migration strategy involves moving from on-prem to a cloud setup by scrapping existing licenses and starting afresh with new ones that fit the cloud model. It is commonly used to adopt a SaaS-based version of an application that provides the same features but works on a cloud-based subscription model.

Strategic Purpose

This approach to cloud migration helps organizations move from a highly customized legacy environment to the cloud as effortlessly as possible and with minimal risk. It involves retiring the current application platform by ending existing licenses and then acquiring new ones that support the cloud.

When to Choose this Strategy

A Repurchase strategy can be chosen when dealing with proprietary platforms or products not designed to operate on cloud infrastructure. A typical example is the migration of an on-prem HR application to Workday on the cloud or using a SaaS-based database service such as Airtable.

5. Retiring

This approach involves retiring or turning off parts of an organizational IT portfolio that are no longer useful or essential to the business requirements.

Strategic Purpose

Retiring specific services that are either redundant or a part of the legacy stack improves cost savings. This involves identifying applications, tools, or services that are no longer scalable or negatively impact other aspects of an efficient framework, including security, resilience, and interoperability.

When to Choose this Strategy

Organizations should choose this migration strategy only after a thorough evaluation of all applications, IT services, and data management tools. A common rule of thumb is to retire a service only when there is a valid business justification, and not merely for the sake of embracing modern technology.

6. Retaining

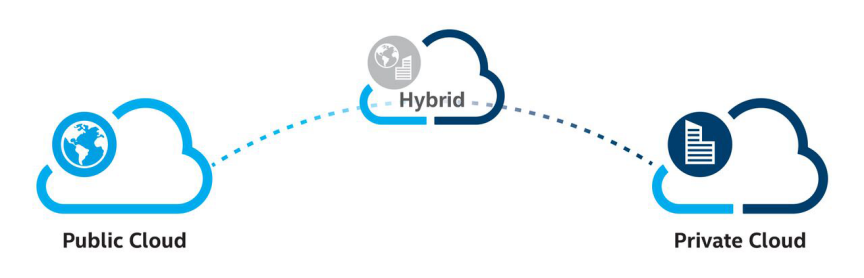

The Retaining migration strategy involves migrating only those legacy applications, tools, and platform components that support migration to the cloud. Essentially, the components that cannot be Refactored or Retired are Retained within the on-prem setup, while the rest are migrated to the cloud.

Strategic Purpose

The Retaining approach to cloud migration allows organizations to keep parts of their IT portfolio on-premises while migrating the rest to run in the cloud.

When to Choose this Strategy

A Retaining migration model is often chosen alongside another migration strategy. It suits organizations with compliance or regulatory requirements that oblige them to store or run some aspects of their IT portfolio within certain regions or on-prem.

Key Considerations for Choosing a Cloud Migration Strategy

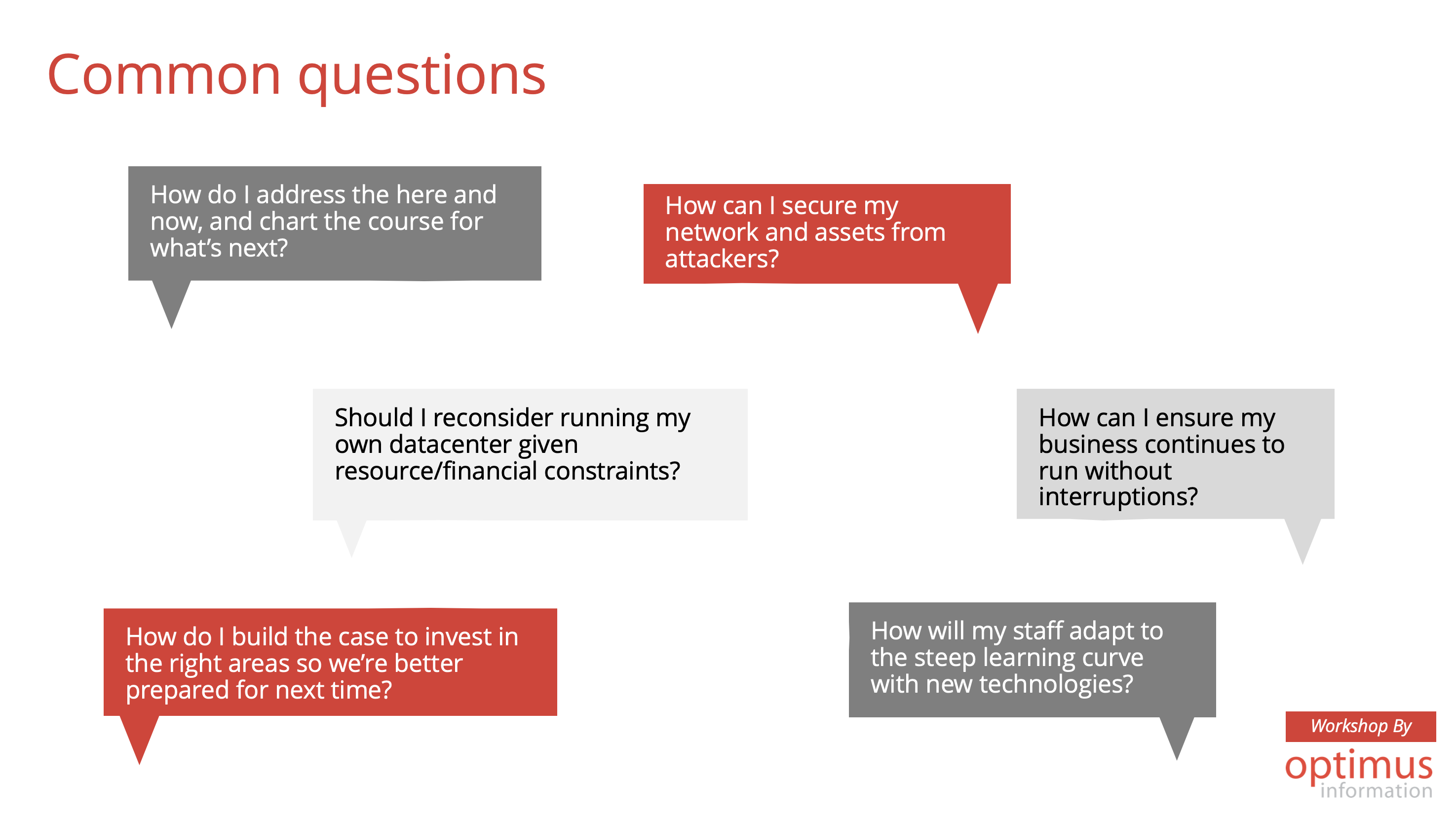

Choosing a migration strategy requires careful consideration of critical factors that have the potential to affect core business objectives. As a rule of thumb, it is advised that organizations consider the following before choosing one of the cloud migration strategies:

- Current on-premises workload: Although the cloud promises scalability and efficiency, it might not be suitable for running certain workloads without adequate refactoring. As a result, organizations must carry out a thorough analysis of which applications to migrate and in what order. This process helps to decide the most efficient way to migrate to the cloud.

- Security: The transition process poses unique security challenges and risks that organizations need to be cautious of. Being aware of such challenges helps organizations perform due diligence in choosing the right cloud provider and a migration strategy.
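To make the "when to choose" guidance for the six strategies more concrete, the following Python sketch maps a few attributes of an application to a suggested strategy. It is a simplified illustration, not a definitive decision procedure: the `Workload` fields, the order of the rules, and the sample application names are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of one application; field names are illustrative."""
    name: str
    still_needed: bool = True                  # False: no longer useful to the business
    must_stay_on_prem: bool = False            # compliance or regulatory constraint
    saas_equivalent_exists: bool = False       # a SaaS product offers the same features
    needs_cloud_native_features: bool = False  # e.g. serverless computing, data lakes
    wants_targeted_optimization: bool = False  # e.g. moving a database to DBaaS


def suggest_strategy(w: Workload) -> str:
    """Return one of the six strategy names using a simple, ordered rule list."""
    if not w.still_needed:
        return "Retire"
    if w.must_stay_on_prem:
        return "Retain"
    if w.saas_equivalent_exists:
        return "Repurchase"
    if w.needs_cloud_native_features:
        return "Refactor"
    if w.wants_targeted_optimization:
        return "Replatform"
    # Default: move the application as-is, the quickest path with the fewest changes.
    return "Rehost"


if __name__ == "__main__":
    portfolio = [
        Workload("legacy-reporting", still_needed=False),
        Workload("payroll-records", must_stay_on_prem=True),
        Workload("hr-suite", saas_equivalent_exists=True),
        Workload("billing-monolith"),
    ]
    for w in portfolio:
        print(f"{w.name}: {suggest_strategy(w)}")
```

In practice, the analysis also has to weigh cost, security, and migration order, so a rule list like this is best treated as a starting point for discussion and not as a final answer.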

Without the right skill sets and expert guidance, migrating to the cloud is never easy. It is particularly complex for organizations that are trying to take advantage of the cost-efficiency, scalability, and elasticity of a cloud model without a clear view of the risks involved.

At Optimus, we take pride in having supported several clients in their journey to digital transformation and migration to the cloud. Contact us today to learn more.
